About me

I am currently a PhD candidate at the École de technologie supérieure (ÉTS) in Montreal, where I work on deep learning for vision applications under the supervision of Prof. Eric Granger and Prof. Mohammadhadi Shateri. My research focuses on adapting and optimizing generative models; in particular, I build solutions for customized and efficient generation in multimodal and low-data settings.

Previously, I obtained a Master's degree in Artificial Intelligence (AI) from the University of Tehran, where I worked as a research assistant in the Machine Learning (ML) Lab under the supervision of Prof. Amin Sadeghi on the foundations of Deep Learning (DL) and on explainability in vision applications. My Master's thesis was titled "When and where to perform regularization in the training of deep learning models?". I obtained my Bachelor's degree in Computer Engineering from the Isfahan University of Technology.

CV

Download my full CV here. (Last update: 10 Dec 2025)

Publications

Teaching

Teaching Assistant for Graduate University Courses

University of Tehran, School of Electrical and Computer Engineering, 2021-2023

- Deep Generative Models (Lead TA) - Fall 2022

- Machine Learning (TA) - Fall 2022

- Advanced Deep Learning (TA) - Spring 2022

- Data Analytics and Visualization (Lead TA) - Fall 2021

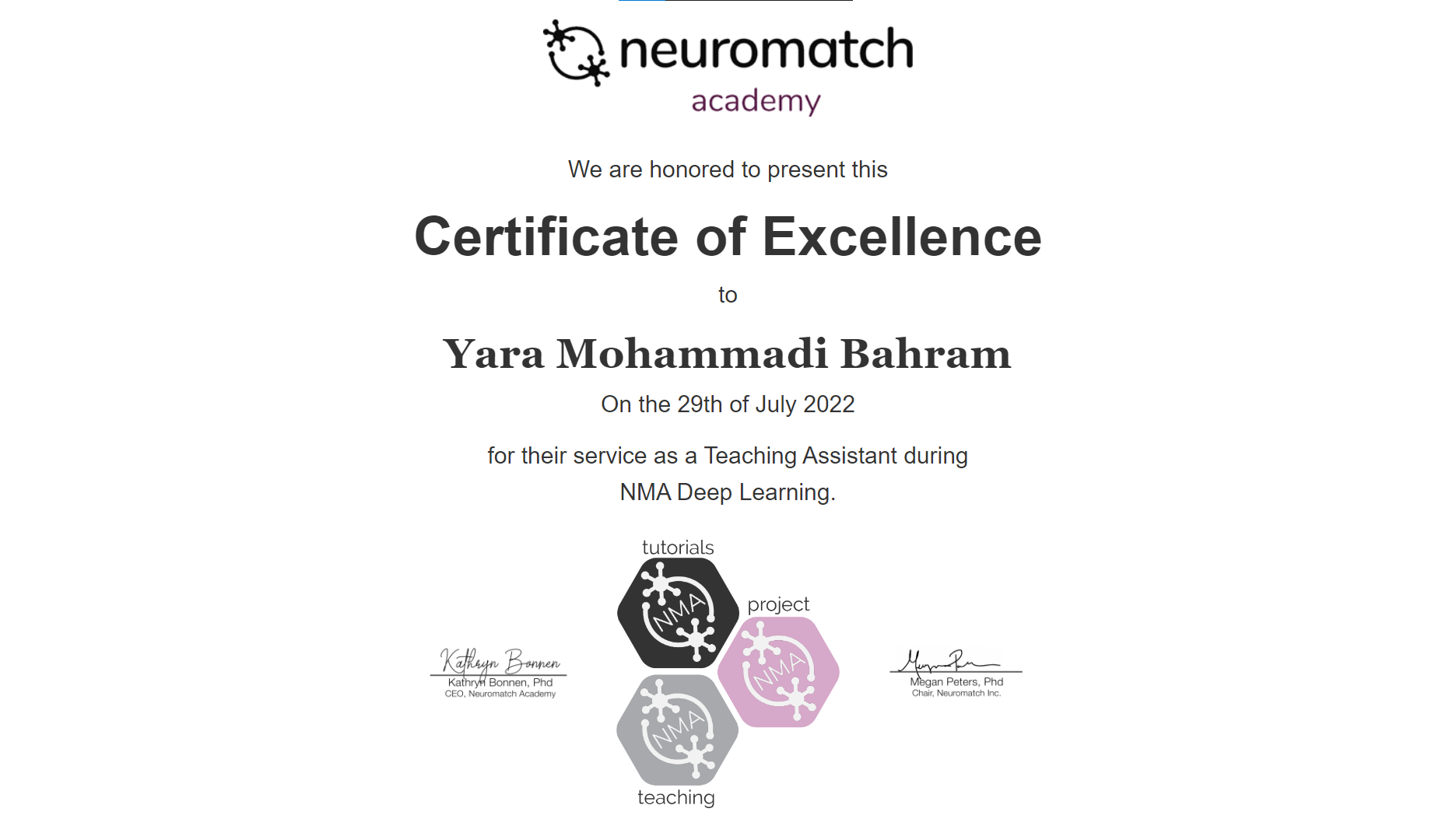

Teaching Assistant at the Neuromatch Academy Deep Learning Summer School

Online, 2022-07

I was selected as a TA to supervise 16 students from different countries full-time over three weeks and to lead two group research projects. The course covered a comprehensive range of Deep Learning topics (Curriculum).

Mentor at the HooshBaaz Data Analytics Summer Bootcamp

University of Tehran, School of Electrical and Computer Engineering, 2022-07

Collaborated with 7 other mentors to teach 80 students across 14 data science workshops (LinkedIn, GitHub).

Personal Teaching Experience

Online, 2021-07

Created over two hours of Persian-language tutorials on Machine Learning with Python, with hands-on exercises. The tutorials covered the basics of machine learning, the main classification and regression methods and models, and dimensionality reduction. I published the videos on YouTube and the code on GitHub.

Projects

Last update: 2024

Deep Learning and Machine Learning

All projects are available on GitHub here.

Trustworthiness:

- Adversarial Training vs. Angular Loss for Robust Classification

- SHAP, LIME, and D-RISE Explanations

- Backdoor Attacks and Out-of-Distribution (OOD) Detection

Generative Models:

- Timestep-Wise Regularization for VAE on Persian Text

- BigBiGAN Analysis and Combination with InfoGAN

Self-Supervised Learning:

- Autoencoders and PixelCNN for Downstream Tasks

- Contrastive Predictive Coding

- SimCLR Analysis

- Unsupervised Representation Learning via Rotation Prediction

Embeddings:

- Visual Question Answering

- Transfer Learning using EfficientNet-B0

- Multimodal Movie Genre Classification

Vision:

- Efficient Instance Segmentation of Pathology Images via a Patch-Based CNN (related to my Bachelor's thesis)

Data Projects

All projects are available on GitHub here.

Data Pipeline (large-scale project)

- A real-time big data system for monitoring, analyzing, and predicting online Persian tweets

NoSQL

- Working with NoSQL Databases (MongoDB, Neo4j, Cassandra, and Elasticsearch)

Spark and GraphX

- Text analysis, log-file mining, stock market analysis, and Wikipedia analysis

Data Analytics

- Crawling static and interactive Iranian webpages

- Spotify Data Collection, Data Analysis, and Recommender System